Qualitative UX Research · Strategy Design · Case Study

Guiding Localization Strategies in GenAI-Driven Content

Project Overview

When I look back at this project, what stands out isn’t just the findings—it’s the journey. A small pilot quickly grew into a multi-phase, multi-market study, and along the way, I stepped into the role of Lead UX Researcher to drive it end-to-end.

I first joined as an associate researcher during the pilot (Phase 1). When the lead left the project, I took over as Lead UX Researcher from Phase 2 onward. From there, I managed the research end-to-end: balancing exploratory and evaluative methods, coordinating with five internal teams, and serving as the bridge to the client’s product and marketing stakeholders.

This case study reflects not only the insights we uncovered, but also how I grew into the role of Lead UX Researcher while managing a truly global program.

About the Project

Client Background & Challenge

Our client supported 16 languages across their global web properties. But the level of localization varied dramatically—some languages were fully localized, others only partially, and some not at all.

The challenge? They had no real data on how users around the world actually thought, felt, and acted toward translated web assets. While backend operations relied heavily on machine translation, GenAI, and translation-management tools, these approaches did not reveal whether localized experiences truly resonated with end users. That gap in understanding drove the need for dedicated UX research across key markets.

Our Research Objectives

Without understanding how their localization efforts were perceived, the client had no way to prioritize improvements or defend localization investments internally. To close that gap, we set three objectives:

The How - Research Process

We structured the project around a repeatable three-stage process:

Discovery & Planning: Scoped through workshops, audits, and research planning.

Design & Execution: Built surveys and interview protocols, recruited participants, and gathered data.

Synthesis & Delivery: Analyzed results, created personas and journey maps, and delivered actionable recommendations.

Each round refined the process further, giving us consistency across markets but flexibility for local contexts.

Grounding the Research

Within that process, we grounded ourselves in a few key questions.

To answer these, we defined five UX pillars as our evaluation framework:

Language – The quality and clarity of translations in the native language.

Terminology – How accessible and understandable the technical vocabulary is.

Content – Whether the translated material is comprehensive and complete.

Tone – The overall character and attitude the messaging conveys.

Navigation – How easily users can find the information they need.

This gave us a structured way to evaluate not only translation quality, but the broader user experience across languages.

Scaling Across 13 Markets

We applied this framework across 13 markets, tiered by the client’s own system:

This tiered approach allowed us to adapt research goals—for example, in non-localized markets, we focused on tolerance for English, while in fully localized markets, we evaluated translation quality and terminology consistency. So while the overall plan stayed consistent across phases, it was also flexible enough to adapt to each tier’s context.

Designing the Studies

Once the goals, frameworks and scope were set, we moved on to designing the studies. After each round, we refined surveys and interview protocols—updating questions based on new findings and tailoring them to each tier’s needs.

We used a mixed-methods approach because we wanted to understand both the what and the why behind user behaviors. We led with in-depth interviews to surface attitudes, pain points, and motivations, and then used surveys to validate those patterns and broaden our view at scale.

Early on—especially in the pilot phase—our surveys leaned heavily on open-ended questions. They carried the flavor of an unmoderated task review, asking participants to describe their actual tasks and language experiences. As the study matured across phases, we gradually shifted toward more structured formats like multiple choice, balancing exploration with comparability.

That’s also why the sample size was intentionally small—about 20 responses per language. The goal wasn’t to run a large-scale quant study, but to complement interviews with task-based, open-ended feedback we could benchmark across the five UX pillars.

Recruiting Users

We targeted a balanced sample:

Customer type: 50% new, 50% existing.

User roles: evenly distributed across Business Decision Makers, Technical Leaders, and Technical Users.

All participants were native speakers (with bilinguals included when English was necessary). Recruitment was run in partnership with our internal recruiting team via Oneforma, with Respondent filling in niche roles. Clear criteria, a live tracker, and screening out linguists (to avoid bias) ensured both speed and quality.
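To make the balanced-sample targets concrete, here is a minimal sketch of how a per-language quota grid could be derived from the 50/50 customer split and the even spread across three roles. The target of 20 only echoes the roughly 20 survey responses per language mentioned earlier; the function names and the rule for handing out leftover seats are my own assumptions, not the tracker we actually used.

```python
CUSTOMER_TYPES = ["new", "existing"]
ROLES = ["Business Decision Maker", "Technical Leader", "Technical User"]


def split_evenly(total, parts, offset=0):
    """Split `total` into `parts` near-equal integers.

    Any remainder goes to consecutive slots starting at `offset`.
    """
    base, extra = divmod(total, parts)
    return [base + (1 if (i - offset) % parts < extra else 0) for i in range(parts)]


def quota_grid(target):
    """Return {(customer_type, role): seats} for one language (hypothetical helper)."""
    grid = {}
    type_totals = split_evenly(target, len(CUSTOMER_TYPES))
    for t_idx, (ctype, t_total) in enumerate(zip(CUSTOMER_TYPES, type_totals)):
        # Rotate the remainder so the same role is not favoured in every row.
        role_seats = split_evenly(t_total, len(ROLES), offset=t_idx)
        for role, seats in zip(ROLES, role_seats):
            grid[(ctype, role)] = seats
    return grid


grid = quota_grid(20)  # 20 is an example target, not a confirmed quota
for (ctype, role), seats in grid.items():
    print(f"{ctype:<9} {role:<24} {seats}")
```

With a target of 20, each customer type gets 10 seats and the roles come out at 7, 7, and 6, which is as close to an even split as six cells allow.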

Executing the Research

In total, we collected 260 survey responses and ran 100 interviews. I personally moderated 50 of those sessions and supported the rest as a note taker.

For tools, we used Aidaform to design and distribute surveys, and Lookback to host and record remote interviews.

Making Sense of the Data

The analysis happened in layers.

For surveys, I combined quantitative analysis (Excel charts, stats) with qualitative coding (themes in Mural, grouped by UX pillars and user activities).

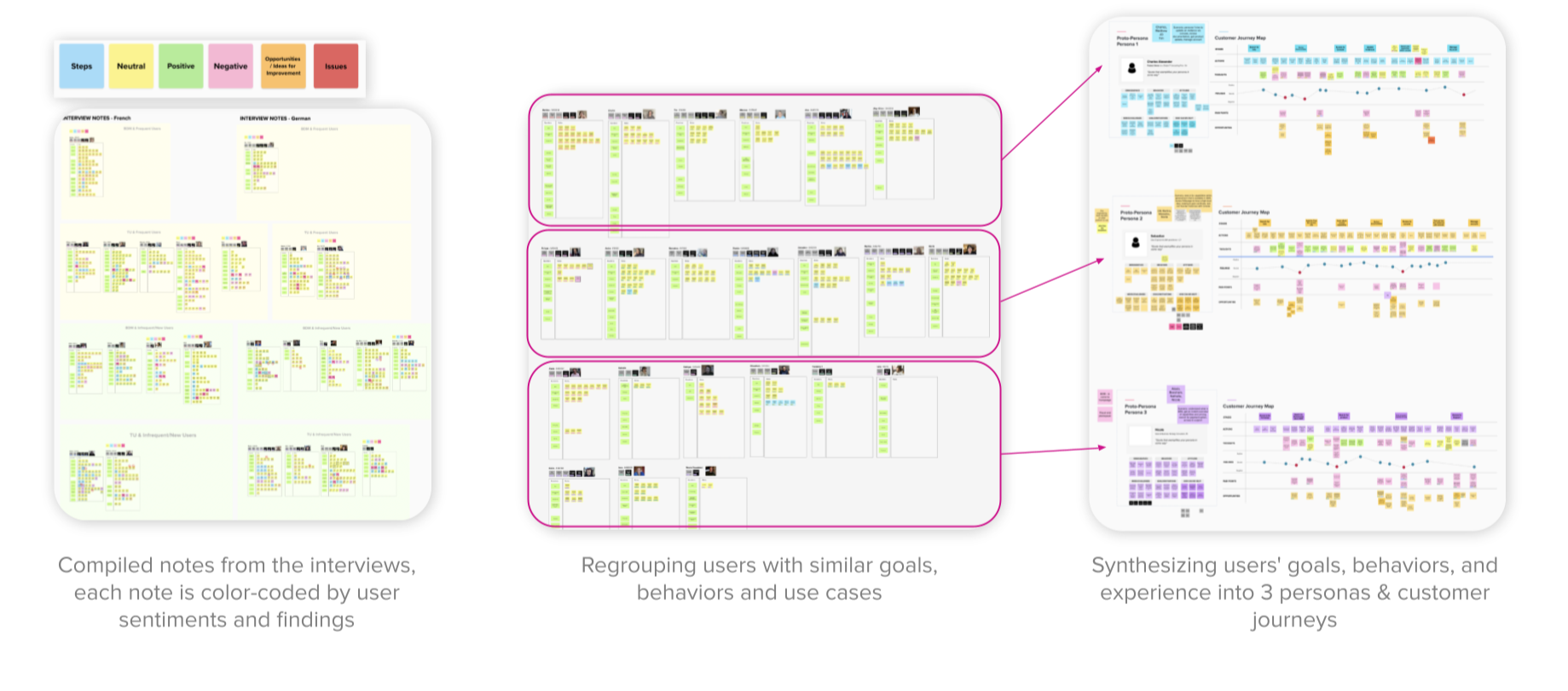
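The grouping step for coded survey comments can be sketched in a few lines. This is an illustration only: the actual coding was done in Mural and Excel, the helper name is hypothetical, and the rows below are placeholders rather than study data.

```python
from collections import Counter

# The five UX pillars used as the evaluation framework.
PILLARS = ("Language", "Terminology", "Content", "Tone", "Navigation")


def tally_by_pillar(coded_comments):
    """Count coded comments per (pillar, theme) pair."""
    counts = Counter()
    for pillar, theme in coded_comments:
        if pillar not in PILLARS:
            raise ValueError(f"Unknown pillar: {pillar}")
        counts[(pillar, theme)] += 1
    return counts


# Placeholder codes, NOT real responses from the study.
sample = [
    ("Language", "theme-a"),
    ("Terminology", "theme-b"),
    ("Language", "theme-a"),
    ("Navigation", "theme-c"),
]

counts = tally_by_pillar(sample)
for (pillar, theme), n in counts.most_common():
    print(f"{pillar:<12} {theme:<8} {n}")
```

Keeping the pillar list fixed and rejecting unknown labels is one way to keep tallies comparable across languages and phases.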

For interviews, notes were color-coded by sentiment and grouped into clusters of goals, behaviors, and pain points.

From there, we synthesized findings into:

1. Three personas

Each reflecting distinct goals, behaviors, and language attitudes.

2. Journey maps

Layering behavioral data (what users do) with perceptual data (what they feel). We visualized how users switch between consoles, docs, and sites—sometimes by choice, sometimes due to missing localized content. Quotes were embedded to keep the human voice front and center.

The What - Findings & Beyond

Insights That Mattered

Three key themes surfaced consistently across markets:

Awkward or inconsistent translations reduce trust.

“Sometimes I sense it's like a machine translation, it's not natural. I don't like using those pages. I switch back to English at that point. If it's machine translation it's a lot harder to understand it.”

Inconsistent terminology hinders efficiency.

“I often encounter terminologies that are unfamiliar to native speaking users. It took me some time to understand them. Also, the translation across pages is inconsistent ... I don't see a systematical approach about terminology translation on the platform.”

Missing translations frustrate less tech-savvy users.

“For senior engineers who are more used to seeing content in English, they can search for what they need without much difficulty. However, the problem becomes critical for junior engineers or those who are not used to reading technical content in English. They may struggle.”

Taken together, this made it clear: translation quality is not just a language issue—it directly impacts credibility, productivity, and inclusiveness.

Deliverables

We packaged insights in multiple formats to ensure impact across teams:

Written reports for cross-team sharing.

Visual decks with infographics, personas, and journey maps.

Raw data packages with translated survey responses and interview transcripts.

This flexibility made the findings not just clear, but immediately usable.

Driving Impact

The project didn’t just end with findings—it reshaped how AWS approached localization.

Our research showed the ROI of localization, which led AWS to establish quarterly research cycles. We then designed a Customer Satisfaction (CSAT) study tailored to their context.

Now, AWS continuously tracks satisfaction and refines its localization strategy, making global content decisions truly data-driven.

More importantly, this work laid the foundation for a stronger partnership. By proving the value of localization research, we became a trusted partner in shaping their ongoing global strategy.

“The structured interviews, paired with themed takeaways like customer quotes and examples, provided valuable insights and were a standout aspect of the project.”

— Product Manager, AI/ML Localization Solutions

“The team’s excellent communication cadence, including weekly check-ins, quick responses, and clear updates, ensured smooth collaboration and project alignment.”

— Sr. Manager, EMEA Marketing Content & Localization Program

Reflections

Looking back, there are things I would improve.

Survey design: Some questions were too long or ambiguous. Next time, I’d streamline and pilot test translations more rigorously.

Interviews: Cultural and linguistic nuances sometimes limited depth. I’d prepare more localized materials and balance novice vs. expert users.

Deliverables: While insights were strong, I’d tie them more explicitly to business KPIs and make visuals even more intuitive.

But overall, this project was a turning point—for me and for the client. It showed the strategic value of UX research in localization and how thoughtful methods can bridge the gap between language and experience.